AI pick-and-shovel plays

Tuesday 14 February 2023

Investing insights

With the release of ChatGPT late last year, AI has headed back up the hype cycle. And while we’ve been here many times before, this time feels different. That said, we are early in a rapidly evolving technological landscape. It’s difficult to know where it will be next year, and almost impossible to see even five years out.

So, what is the best way to play the AI trend and benefit from companies succeeding in the sector? We think a prudent strategy is to invest in companies building the tools that sustain the industry’s growth. They’re known as the pick-and-shovel plays. We think the structural backbone of AI is built on cloud computing and semiconductors. From the companies we cover at Swell, we have six worthy of consideration: three cloud providers – Amazon, Microsoft and Alphabet; and three semiconductor makers – Nvidia, TSMC and ASML.

Why cloud providers?

AI models such as large language models (LLMs) need to run in vast data centres. Nvidia CEO Jensen Huang calls them ‘AI factories’, and their growth has validated the benefits of scaling these models. Scaling leads to a phenomenon known as emergence. As the LLM increases in size, the AI exhibits non-linear breakthrough abilities: a sudden spike in improvement in a specific and often unpredictable capability.

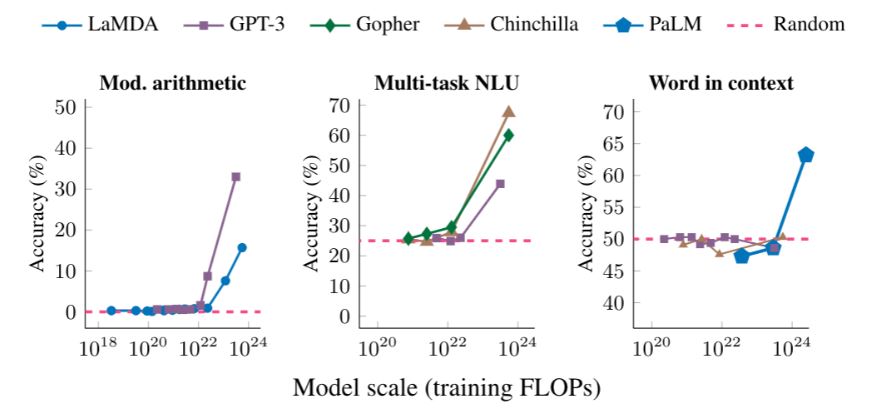

This is why LLMs like OpenAI’s GPT-3 excel at a range of tasks. GPT-3 has 175 billion parameters and is competent at text comprehension, language translation and even writing code. The charts below show the spike in improvement as LLMs scale, “as measured by floating point operations (FLOPs), or how much compute was used to train the language model”.

Examples of emergence in various Large Language Models

Source: Google Blog – Characterizing Emergent Phenomena in Large Language Models, November 2022

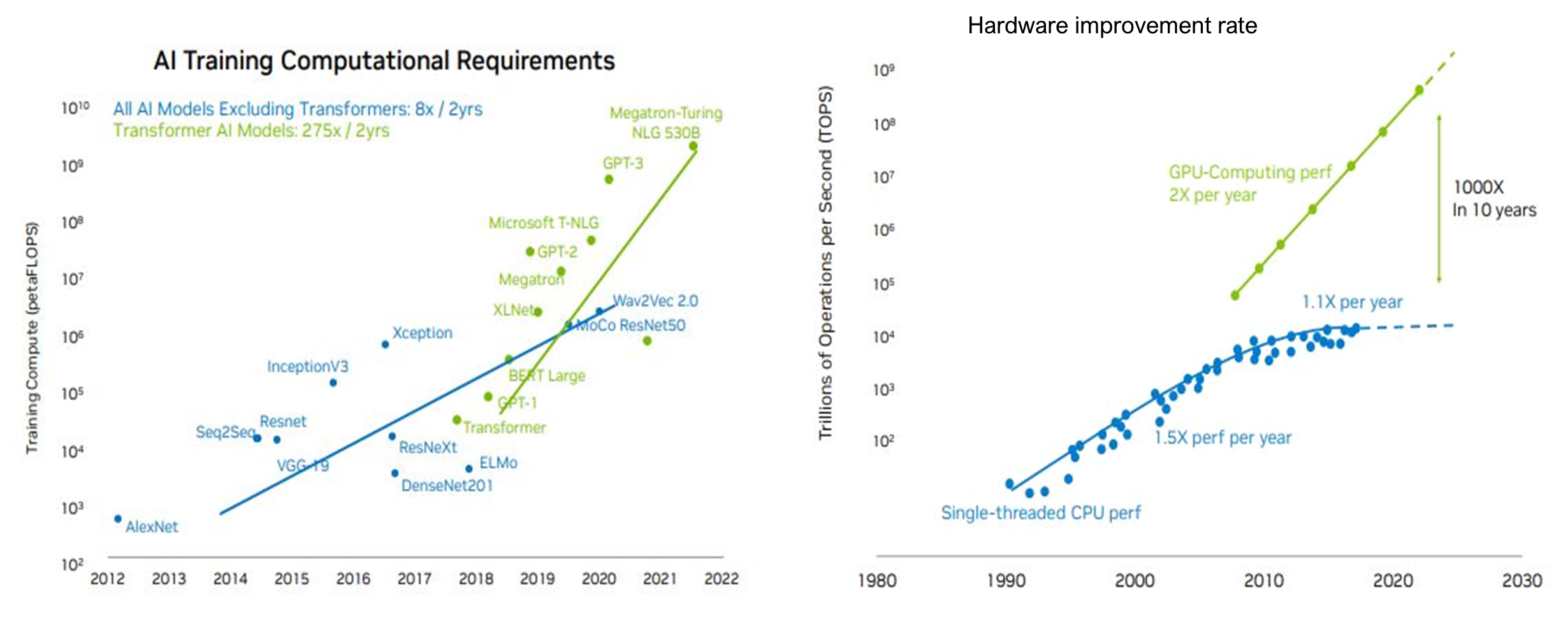

This exponential amplification in a model’s capabilities is driving the race for larger models. The largest deep learning models increased from 98 million parameters in 2018 to 540 billion today. Moreover, the computational requirements to train the models are growing at a rate of 275x every two years. The cost of training and running the models grows with input scale, making LLMs affordable for only the largest tech companies or well-funded start-ups.
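To put those growth figures on a common footing, the rough sketch below converts them into per-year multiples. It uses only the numbers quoted above; the four-year span for the parameter count is our assumption (we read “today” as models released by 2022), and the function name is purely illustrative.

```python
def annualised_factor(total_factor: float, years: float) -> float:
    """Convert a growth multiple over `years` into an equivalent per-year multiple."""
    return total_factor ** (1.0 / years)


# Training compute: 275x every two years (figure quoted in the article).
compute_per_year = annualised_factor(275, 2)

# Parameter count: 98 million (2018) to 540 billion.
param_growth = 540e9 / 98e6

# ASSUMPTION: a four-year span, i.e. 2018 to 2022. The article only says "today".
params_per_year = annualised_factor(param_growth, 4)

print(f"Compute requirement grows roughly {compute_per_year:.1f}x per year")
print(f"Parameter count grew roughly {param_growth:,.0f}x in total")
print(f"That is roughly {params_per_year:.1f}x per year over the assumed four years")
```

On these assumptions, compute demand is compounding faster than model size, which is consistent with the point that costs rise steeply as models scale.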

The current paradigm is more suited to a centralised, cloud-based model, where the AI is trained and run in the cloud as opposed to on-device. Hence the large public cloud providers can provide the infrastructure that powers the world’s AI applications. Amazon, Microsoft, and Google are among the largest public cloud providers globally.

Growth in model size is outpacing improvements in compute efficiency, driving the cost of training and running AI models through the roof. Source: Nvidia investor presentation, November 2022

Why semiconductor companies?

High-end semiconductors, primarily GPUs (graphics processing units), are critical to training and running AI models. Demand from AI is expected to help drive industry revenues past $1 trillion by 2030.

Nvidia is the leader in GPU design and has a powerful moat built from its CUDA platform. GPUs are used in roughly 30% of existing data centre servers, but Nvidia expects this to approach 100%. The estimate assumes AI use increases across the board, which is probable. The sheer demand for computing power means Nvidia’s design supremacy is secure for the foreseeable future. Its long-term risk is the well-resourced big tech companies investing in designing their own AI chips.

Two companies would benefit either way: TSMC (Taiwan Semiconductor Manufacturing Company) and ASML (Advanced Semiconductor Materials Lithography). Whether it’s Nvidia GPUs or big tech’s custom chips, chances are TSMC is making them and using ASML equipment to do so. TSMC is the frontrunner in leading-edge semiconductor manufacturing, with only two real competitors. ASML is the only company in the world capable of making EUV (extreme ultraviolet) lithography machines.

Neither company is without risk, though. For TSMC the geopolitical risk is potentially existential. But if you can stomach that, it trades on a PE of 14x with a 2% dividend yield, which is very attractive.
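For readers who want to unpack those two valuation figures, here is a minimal sketch of what they imply together. It relies only on the 14x PE and 2% yield quoted above and on standard ratio definitions; the function names are our own, and the output is simple arithmetic rather than a forecast.

```python
def earnings_yield(pe_ratio: float) -> float:
    """Earnings per dollar of share price: the inverse of the PE ratio."""
    return 1.0 / pe_ratio


def implied_payout_ratio(pe_ratio: float, dividend_yield: float) -> float:
    """Share of earnings paid as dividends: (dividend / price) * (price / earnings)."""
    return dividend_yield * pe_ratio


pe = 14.0         # PE multiple quoted in the article
div_yield = 0.02  # dividend yield quoted in the article

print(f"Earnings yield: {earnings_yield(pe):.1%}")
print(f"Implied payout ratio: {implied_payout_ratio(pe, div_yield):.0%}")
```

In other words, a 14x multiple corresponds to an earnings yield of about 7%, with a little over a quarter of earnings paid out as dividends.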

That said, one company stands out to us as the pick of the bunch.

Microsoft – little to lose

Periods of rapid technological change are often when the seeds of disruption are sown. Microsoft is uniquely positioned among the incumbents as it has little to lose from AI and so much to gain. CEO Satya Nadella has a formidable track record for capital allocation and is committed to pursuing AI aggressively. Microsoft also owns both the infrastructure (Azure) to run large-scale AI and the software (M365 suite) to benefit from its integration and drive further AI growth.

Microsoft invested in OpenAI in 2019, and again in 2021. It recently increased its stake through a multi-year investment rumoured to be around $10 billion.

Microsoft can create new value for existing users while potentially disrupting incumbents in other fields. Products like the recently released Teams Premium, which uses OpenAI models to annotate and summarise Teams meetings in real time, will add value to an already successful suite of productivity solutions. And yet-to-be-released products such as Microsoft Designer, which infuses generative AI into the creative process, will compete in areas historically outside Microsoft’s reach: markets dominated by other software giants like Adobe. Here Microsoft can afford to be more innovative as it has relatively little to lose but much to gain.

Search

Search is another area where the risk/reward trade-off is skewed to the upside for Microsoft. While it’s not yet clear how it will be monetised, AI-powered search could complement monetised search. The principle of complementary goods holds that if you lower the cost of a complementary service or product, demand for your own product increases.

Google has been exploiting this for years. It provides direct answers to queries such as “What’s the weather forecast?” even though those answers are not monetised. Google does this because the 80% of its queries that are not monetised drive growth in the 20% that are. The process creates a flywheel that has made Google’s search empire almost impenetrable.

But Microsoft has the opportunity to disrupt Google’s dominance through its first-to-market AI-powered search with Bing. To be clear, we don’t believe it will replace Google anytime soon. However, Google’s Bard announcement on February 6 suggested its release is not imminent. Search accounts for 58% of Google’s revenue but only 6% of Microsoft’s. Consequently, Google has far more at stake and must approach AI search integration with caution.

GitHub Copilot

Perhaps the most underappreciated aspect of Microsoft’s AI strategy is GitHub Copilot. It was released publicly in June last year. Already, industry insiders suggest it is writing between 40% and 80% of code for the developers who use it. This could lead to unprecedented efficiency gains per developer, at a time when many businesses are looking to cut costs or at least do more with less. In addition, we see Copilot further democratising software development. It could become a tool not just for professional developers but for a much larger base of knowledge workers.

Known unknowns

The difficulty with investing in AI right now is the known unknowns. What will the industry look like in five years’ time? Who will be the winners and losers? With change occurring at such a rapid rate, even some AI experts are being left behind.

We recommend treading with caution. But investing in the pick-and-shovel companies could be the best way to gain AI exposure without taking on unnecessary risk.

This article first appeared on Livewire Markets